The research lab OpenAI has released the full version of a text-generating AI system that experts warned could be used for malicious purposes.

The institute originally announced the system, GPT-2, in February this year, but withheld the full version of the program out of fear it would be used to spread fake news, spam, and disinformation. Since then it’s released smaller, less complex versions of GPT-2 and studied their reception. Others also replicated the work. In a blog post this week, OpenAI now says it’s seen “no strong evidence of misuse” and has released the model in full.

GPT-2 is part of a new breed of text-generation systems that have impressed experts with their ability to generate coherent text from minimal prompts. The system was trained on eight million text documents scraped from the web and responds to text snippets supplied by users. Feed it a fake headline, for example, and it will write a news story; give it the first line of a poem and it’ll supply a whole verse.

It’s tricky to convey exactly how good GPT-2’s output is, but the model frequently produces eerily cogent writing that can give the appearance of intelligence (though that’s not to say what GPT-2 is doing involves anything we’d recognize as cognition). Play around with the system long enough, though, and its limitations become clear. It struggles particularly with long-term coherence: for example, using the names and attributes of characters consistently in a story, or sticking to a single subject in a news article.

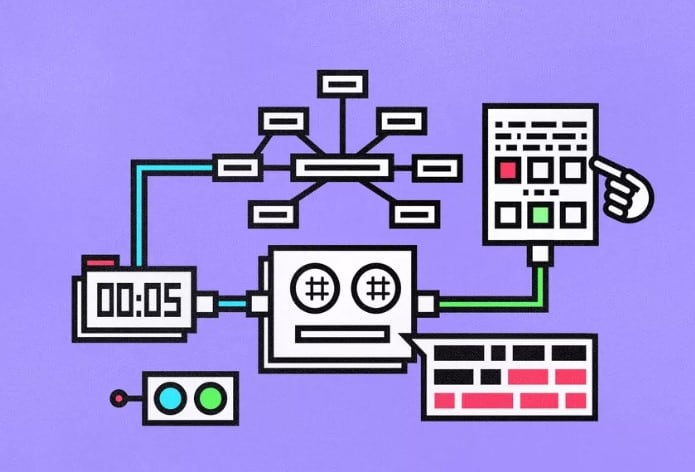

The best way to get a feel for GPT-2’s abilities is to try it out yourself. You can access a web version at TalkToTransformer.com and enter your own prompts. (A “transformer” is a type of machine learning architecture used to build GPT-2 and similar models.)

Apart from the raw capabilities of GPT-2, the model’s release is notable as part of an ongoing debate about the responsibility of AI researchers to mitigate harm caused by their work. Experts have pointed out that easy access to cutting-edge AI tools can enable malicious actors, a dynamic we’ve seen with the use of deepfakes to generate revenge porn, for example. OpenAI limited the release of its model because of this concern.

However, not everyone applauded the lab’s approach. Many experts criticized the decision, saying it limited the amount of research others could do to mitigate the model’s harms, and that it created unnecessary hype about the dangers of artificial intelligence.

“The words ‘too dangerous’ were casually thrown out here without a lot of thought or experimentation,” researcher Delip Rao told The Verge back in February. “I don’t think [OpenAI] spent enough time proving it was actually dangerous.”