For many people, coding is about telling a computer what to do and having the computer perform those precise actions repeatedly. With the rise of AI tools like ChatGPT, it’s now possible for someone to describe a program in English and have the AI model translate it into working code without ever understanding how the code works. Former OpenAI researcher Andrej Karpathy recently gave this practice a name, “vibe coding,” and it’s gaining traction in tech circles.

The technique, enabled by large language models (LLMs) from companies like OpenAI and Anthropic, has attracted attention for potentially lowering the barrier to entry for software creation. But questions remain about whether the approach can reliably produce code suitable for real-world applications, even as tools like Cursor Composer, GitHub Copilot, and Replit Agent make the process increasingly accessible to non-programmers.

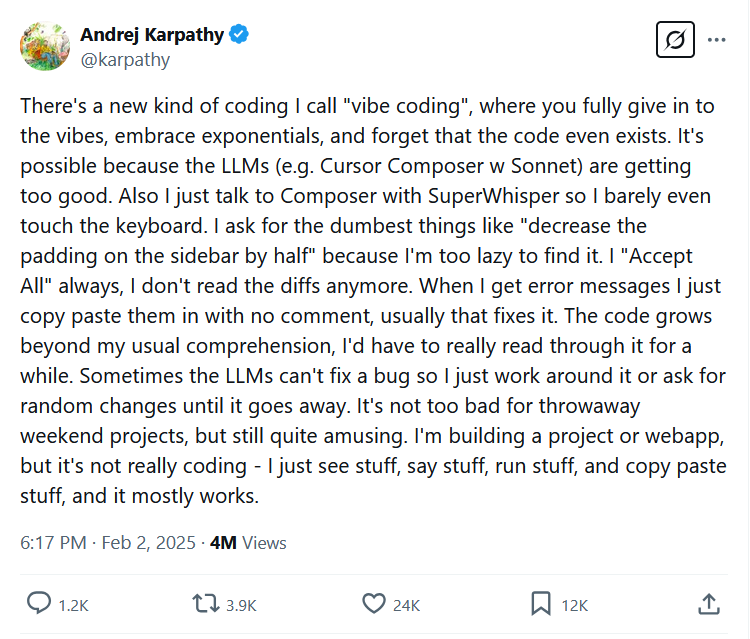

Instead of being about control and precision, vibe coding is all about surrendering to the flow. On February 2, Karpathy introduced the term in a post on X, writing, “There’s a new kind of coding I call ‘vibe coding,’ where you fully give in to the vibes, embrace exponentials, and forget that the code even exists.” He described the process in deliberately casual terms: “I just see stuff, say stuff, run stuff, and copy paste stuff, and it mostly works.”

When an error occurs during vibe coding, you feed it back into the AI model, accept the changes, hope they work, and repeat the process. Karpathy’s technique stands in stark contrast to traditional software development best practices, which typically emphasize careful planning, testing, and understanding of implementation details.
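To make that loop concrete, here is a minimal Python sketch of the run, fail, paste-the-error, accept cycle. It is an illustration under stated assumptions, not how Cursor or any other tool works internally: `vibe_loop` and the `ask_model` callback are hypothetical names, and `ask_model` stands in for whatever AI assistant you would actually paste the error into.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Callable, Tuple


def vibe_loop(
    source: str,
    ask_model: Callable[[str, str], str],
    max_rounds: int = 5,
) -> Tuple[str, bool]:
    """Run `source`; on failure, hand the code and the error text to
    `ask_model` (a hypothetical stand-in for an AI assistant) and retry.

    Returns the last version of the code and whether it ran cleanly.
    """
    for _ in range(max_rounds):
        with tempfile.TemporaryDirectory() as tmp:
            script = Path(tmp) / "app.py"
            script.write_text(source)
            # Run the current version in a fresh interpreter and capture output.
            result = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                text=True,
            )
        if result.returncode == 0:
            return source, True  # it "mostly works"; stop here
        # "Accept All": take the model's rewrite without reading the diff.
        source = ask_model(source, result.stderr)
    return source, False
```

Note what the loop never does: it never inspects the code itself. The only signal is whether the program exits without an error, and that is the trade-off the rest of this article examines.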

As Karpathy humorously acknowledged in his original post, the approach makes for the ultimate lazy programmer experience: “I ask for the dumbest things, like ‘decrease the padding on the sidebar by half,’ because I’m too lazy to find it myself. I ‘Accept All’ always; I don’t read the diffs anymore.”

At its core, the technique transforms anyone with basic communication skills into a new type of natural language programmer, at least for simple projects. Because AI models are currently limited by the amount of code they can digest at once (context size), there tends to be an upper limit to how complex a vibe-coded software project can get before the human at the wheel becomes a high-level project manager, manually assembling slices of AI-generated code into a larger architecture. But as those technical limits expand with each generation of AI models, that ceiling may one day disappear.

Who are the vibe coders?

There’s no way to know exactly how many people are currently vibe coding their way through either hobby projects or development jobs, but Cursor reported 40,000 paying users in August 2024, and GitHub reported 1.3 million Copilot users just over a year ago (February 2024). While we can’t find user numbers for Replit Agent, the site claims 30 million users, with an unknown percentage using the site’s AI-powered coding agent.

One thing we do know: the approach has particularly gained traction online as a fun way of rapidly prototyping games. Microsoft’s Peter Yang recently demonstrated vibe coding in an X thread by building a simple 3D first-person shooter zombie game through conversational prompts fed into Cursor and Claude 3.7 Sonnet. Yang even used a speech-to-text app so he could verbally describe what he wanted to see and refine the prototype over time.

We’ve been doing some vibe coding ourselves. Multiple Ars staffers have used AI assistants and coding tools for extracurricular hobby projects such as creating small games, crafting bespoke utilities, writing processing scripts, and more. Having a vibe-based code genie can come in handy in unexpected places: Last year, I asked Anthropic’s Claude to write a Microsoft Q-BASIC program in MS-DOS that decompressed 200 ZIP files into custom directories, saving me many hours of manual typing work.

Debugging the vibes

With all this vibe coding going on, we had to turn to an expert for some input. Simon Willison, an independent software developer and AI researcher, offered a nuanced perspective on AI-assisted programming in an interview with Ars Technica. “I really enjoy vibe coding,” he said. “It’s a fun way to try out an idea and prove if it can work.”

But there are limits to how far Willison will go. “Vibe coding your way to a production codebase is clearly risky. Most of the work we do as software engineers involves evolving existing systems, where the quality and understandability of the underlying code is crucial.”

At some point, understanding at least some of the code is important because AI-generated code may include bugs, misunderstandings, and confabulations—for example, instances where the AI model generates references to nonexistent functions or libraries.
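One narrow case, a confabulated library, can be checked mechanically before the code ever runs. The Python sketch below is an assumption-laden illustration rather than a feature of any tool named in this article: `missing_modules` is a hypothetical helper that uses only the standard library to list imported top-level modules that are not installed in the current environment.

```python
import ast
import importlib.util
from typing import List


def missing_modules(source: str) -> List[str]:
    """Return top-level module names imported by `source` that cannot be
    found in the current Python environment."""
    missing: List[str] = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            names = [node.module]  # skip relative imports (level > 0)
        else:
            continue
        for name in names:
            root = name.split(".")[0]  # only check the top-level package
            if importlib.util.find_spec(root) is None and root not in missing:
                missing.append(root)
    return missing
```

A check like this catches only invented module names. It says nothing about a nonexistent function inside a real library or about logic that runs but does the wrong thing, so reading the code is still needed for those.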

“Vibe coding is all fun and games until you have to vibe debug,” developer Ben South noted wryly on X, highlighting this fundamental issue.

Willison recently argued on his blog that encountering hallucinations with AI coding tools isn’t as detrimental as embedding false AI-generated information into a written report, because coding tools have built-in fact-checking: If there’s a confabulation, the code won’t work. This provides a natural boundary for vibe coding’s reliability—the code runs or it doesn’t.

Even so, the risk-reward calculation for vibe coding becomes far more complex in professional settings. While a solo developer might accept the trade-offs of vibe coding for personal projects, enterprise environments typically require code maintainability and reliability standards that vibe-coded solutions may struggle to meet. When code doesn’t work as expected, debugging requires understanding what the code is actually doing—precisely the knowledge that vibe coding tends to sidestep.