This week, leading AI lab OpenAI released its latest project: an AI that can play hide-and-seek. It’s the latest example of how, with current machine learning techniques, a very simple setup can produce shockingly sophisticated results.

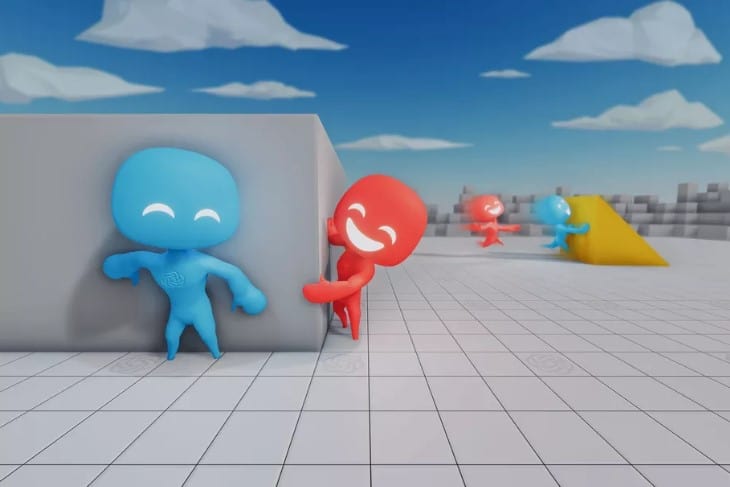

The AI agents play a very simple version of the game, where the “seekers” get points whenever the “hiders” are in their field of view. The “hiders” get a little time at the start to set up a hiding place and get points when they’ve successfully hidden themselves; both sides can move objects around the playing field (like blocks, walls, and ramps) for an advantage.
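To make that incentive structure concrete, here is a minimal Python sketch of a team reward for a single time step. It assumes, as OpenAI's own write-up describes, that all hiders share one reward and the seekers get the exact opposite: +1 for the hiders when no hider is visible to any seeker, -1 when any hider is seen, and nothing for anyone during the initial setup period. The function and parameter names (`team_rewards`, `visible`, `preparation_steps`) are hypothetical, and "field of view" is reduced to a precomputed true/false table instead of real geometry.

```python
def team_rewards(visible: list[list[bool]], step: int,
                 preparation_steps: int) -> tuple[float, float]:
    """Return (hider_reward, seeker_reward) for one time step.

    visible[s][h] is True if seeker s can currently see hider h
    (a hypothetical, precomputed line-of-sight check).
    """
    # Nobody is rewarded while the hiders are still setting up.
    if step < preparation_steps:
        return 0.0, 0.0
    # The hiders lose this step if any seeker sees any hider.
    any_seen = any(any(row) for row in visible)
    hider_reward = -1.0 if any_seen else 1.0
    # Zero-sum: the seekers receive exactly the opposite reward.
    return hider_reward, -hider_reward


# Two seekers, two hiders; seeker 1 has spotted hider 0.
seen = [[False, False], [True, False]]
print(team_rewards(seen, step=50, preparation_steps=40))  # (-1.0, 1.0)
print(team_rewards(seen, step=10, preparation_steps=40))  # (0.0, 0.0)
```

Note that nothing in this function mentions blocks, ramps, or shelters; whatever use the agents make of those objects has to be discovered on their own.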

The results from this simple setup were quite impressive. Over the course of 481 million games of hide-and-seek, the AI appeared to develop strategies and counterstrategies, and the AI agents moved from running around at random to coordinating with their allies to make complicated strategies work. (Along the way, they showed off their ability to break the game physics in unexpected ways, too; more on that below.)

It also shows how much can be done with a simple AI technique called reinforcement learning, in which AI systems receive “rewards” for desired behavior and are set loose to learn, over millions of games, the best way to maximize those rewards.
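The reward-driven learning loop can be sketched in a few lines of Python. This is not OpenAI's method: their agents were large neural networks trained with an algorithm called proximal policy optimization (PPO). As a stand-in, the sketch uses tabular Q-learning, one of the simplest reinforcement learning algorithms, on a hypothetical five-cell corridor game in which the agent earns a reward only when it reaches the last cell. All names and settings (`N_CELLS`, `alpha`, `gamma`, `epsilon`, and so on) are illustrative assumptions.

```python
import random

N_CELLS = 5          # cells 0..4; reaching cell 4 ends the game with reward +1
ACTIONS = (-1, +1)   # index 0 = step left, index 1 = step right


def play_step(state: int, action: int) -> tuple[int, float, bool]:
    """Toy game: move, stay inside the corridor, reward only at the goal."""
    nxt = min(max(state + action, 0), N_CELLS - 1)
    done = nxt == N_CELLS - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    rng = random.Random(seed)
    # q[s][a] estimates the total future reward of taking action a in cell s.
    q = [[0.0, 0.0] for _ in range(N_CELLS)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Usually pick the best-known action; explore at random on ties
            # or with probability epsilon.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = play_step(state, ACTIONS[a])
            # Q-learning update: nudge the estimate toward the reward plus
            # the discounted value of the best action from the next cell.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q


def greedy_policy(q: list[list[float]]) -> list[str]:
    """Best-known move in each non-goal cell."""
    return ["right" if s[1] > s[0] else "left" for s in q[:-1]]


print(greedy_policy(train()))  # ['right', 'right', 'right', 'right']
```

The agent is never told which direction the goal lies in. Starting from random moves, repeated play and a single reward at the end are enough for it to settle on walking right from every cell, which is the same dynamic, at a vastly smaller scale, that drives the hide-and-seek agents.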

The idea behind reinforcement learning is simple, but the strategic behavior it produces isn’t simple at all. Researchers have previously used reinforcement learning, among other techniques, to build AI systems that can play complex war strategy games, and some researchers think that highly sophisticated systems could be built with reinforcement learning alone. This simple game of hide-and-seek is a great illustration of how reinforcement learning works in practice and how simple instructions can produce surprisingly intelligent behavior. AI capabilities are continuing to march forward, for better or for worse.

The first lesson: how to chase and hide

It may have taken a few million games of hide-and-seek, but eventually the AI agents figured out the basics of the game: chasing one another around the map.

The second lesson: how to build a defensive shelter

AI agents can “lock” blocks in place, and only the team that locked a block can unlock it. After millions of games of practice, the hiders learned to build a shelter out of the available blocks. Inside the shelter, the seekers can’t find them, so this is a win for the hiders, at least until someone comes up with a new idea.

Using ramps to breach a shelter

Millions of games later, the seekers figured out how to counter this behavior by the hiders: they can drag a ramp over, climb it, and find the hiders.

After a while, the hiders learned a countermove: they could freeze the ramps in place so the seekers couldn’t move them. OpenAI’s team notes that they thought this would be the end of the game, but they were wrong.

Box surfing to breach shelters

Eventually, seekers learned to push a box over to the frozen ramps, climb onto the box, and “surf” it over to the shelter where they can once again find the hiders.

Defending against box surfing

There’s an obvious counterstrategy for the hiders here: freezing every object within reach so the seekers have no tools to work with. Indeed, that’s what they learned to do.

That’s how a game of hide-and-seek goes between AI agents with millions of games of experience. The interesting thing here is that none of the behavior on display was directly taught or even directly rewarded. Agents are rewarded only for staying hidden or for spotting the hiders. But that simple incentive was enough to encourage lots of creative in-game behavior.

Many AI researchers think that reinforcement learning can be used to solve complicated tasks with real-world implications, too. The way powerful strategic decision-making emerges from simple instructions is promising, but it’s also concerning. As we’ve seen, solving problems with reinforcement learning leads to lots of unexpected behavior. That’s charming in a game of hide-and-seek, but potentially alarming in an AI system designing a drug meant to treat cancer (if the unintended behavior causes life-threatening complications) or in an algorithm meant to improve power plant output (if the AI exploits some obscure condition in its goals rather than simply providing consistent power).

That’s the hazardous flip side of techniques like reinforcement learning. On the one hand, they’re powerful techniques that can produce advanced behavior from a simple starting point. On the other hand, they’re powerful techniques that can produce unexpected — and sometimes undesired — advanced behavior from a simple starting point.

As AI systems grow more powerful, we need to give careful consideration to how to ensure they do what we want.