I can look into your eyes and see straight to your heart.

It may sound like a sappy sentiment from a Hallmark card. Essentially though, that’s what researchers at Google did in applying artificial intelligence to predict something deadly serious: the likelihood that a patient will suffer a heart attack or stroke. The researchers made these determinations by examining images of the patient’s retina.

Google, which is presenting its findings Monday in Nature Biomedical Engineering, an online medical journal, says that such a method is as accurate as predicting cardiovascular disease through more invasive measures that involve sticking a needle in a patient’s arm.

At the same time, Google cautions that more research needs to be done.

According to the company, medical researchers have previously shown some correlation between retinal vessels and the risk of a major cardiovascular episode. Using the retinal image, Google says it was able to quantify this association and, 70% of the time, accurately predict which patient would experience a heart attack or other major cardiovascular event within five years, and which patient would not. Those results were in line with testing methods that require blood be drawn to measure a patient’s cholesterol.

Google trained its models on data from 284,335 patients and validated them on two independent data sets of 12,026 and 999 patients.

“The caveat to this is that it’s early, (and) we trained this on a small data set,” says Google’s Lily Peng, a doctor and lead researcher on the project. “We think that the accuracy of this prediction will go up a little bit more as we kind of get more comprehensive data. Discovering that we could do this is a good first step. But we need to validate.”

Peng says Google was a bit surprised by the results. Her team had been working on predicting eye disease, then expanded the exercise by asking the model to predict from the image whether the person was a smoker or what their blood pressure was. Taking it further to predicting the factors that put a person at risk of a heart attack or stroke was an offshoot of the original research.

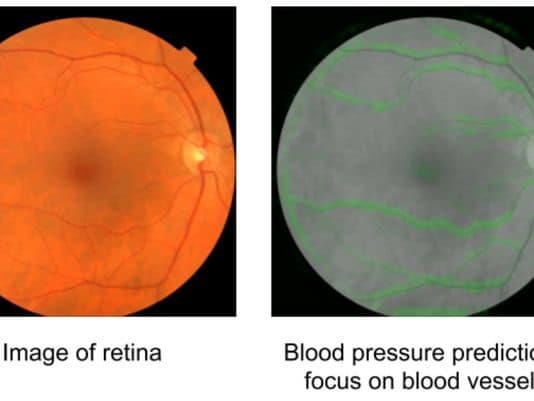

Google’s technique generated a “heatmap,” or graphical representation of data that revealed which pixels in an image were the most important for predicting a specific risk factor. For example, Google’s algorithm paid more attention to blood vessels for making predictions about blood pressure.

“Pattern recognition and making use of images is one of the best areas for AI right now,” says Harlan M. Krumholz, a professor of medicine (cardiology) and director of Yale’s Center for Outcomes Research and Evaluation, who considers the research a proof of concept.

It will “help us understand these processes and diagnoses in ways that we haven’t been able to do before,” he says. “And this is going to come from photographs and sensors and a whole range of devices that will help us essentially improve the physical examination and I think more precisely hone our understanding of disease and individuals and pair it with treatments.”

Should further research pan out over time, physicians, as part of routine health check-ups, might study such retinal images to help assess and manage patients’ health risks.

How long might it take?

Peng says it is more in the “order of years” than something that will happen over the next few months. “It’s not just when it’s going to be used, but how it’s going to be used,” she says.

But Peng is optimistic that artificial intelligence can be applied in other areas of scientific discovery, including perhaps in cancer research.

Medical discoveries are typically made through what she says is a sophisticated form of “guess and test,” which means developing hypotheses from observations and then designing and running experiments to test them.

But observing and quantifying associations with medical images can be challenging, Google says, because of the wide variety of features, patterns, colors, values and shapes that are present in real images.

“I am very excited about what this means for discovery,” Peng says. “We hope researchers in other places will take what we have and build on it.”